Pandas DataFrame Tutorial - Beginner's Guide to GPU Accelerated DataFrames in Python | NVIDIA Technical Blog

Speedup Python Pandas with RAPIDS GPU-Accelerated Dataframe Library called cuDF on Google Colab! - Bhavesh Bhatt

GitHub - patternedscience/GPU-Analytics-Perf-Tests: A GPU-vs-CPU performance benchmark: (OmniSci [MapD] Core DB / cuDF GPU DataFrame) vs (Pandas DataFrame / Postgres / PDAL)

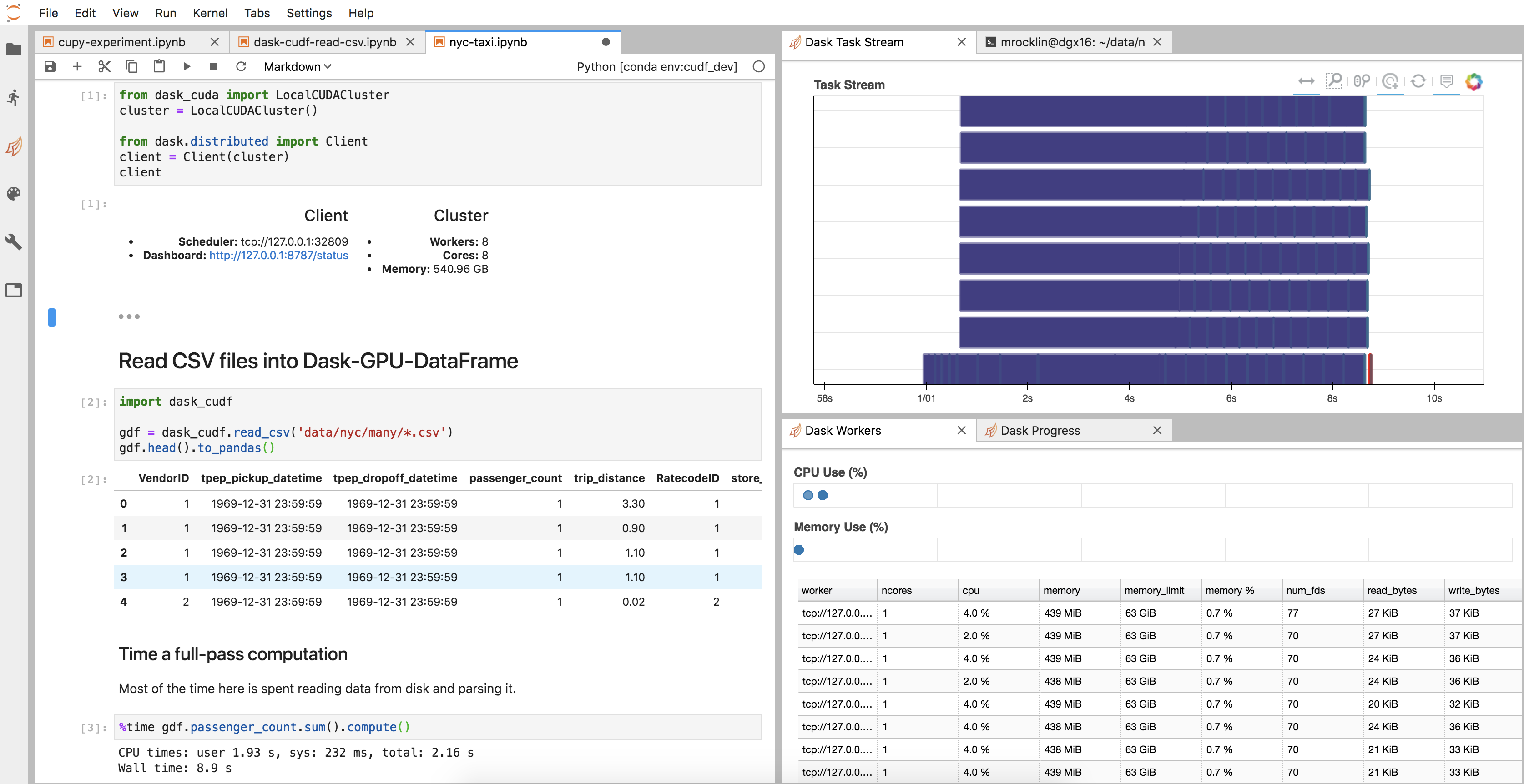

Python Pandas vs. Vaex Dataframes: A Comparative Analysis | by Ulrik Thyge Pedersen | Apr, 2023 | Towards AI